Salesforce just revoked OAuth access for thousands of Gainsight integrations across customer orgs. (Source: Salesforce Security Bulletin)

Here’s what it means for your health scores, renewals, and exec trust.

TL;DR

Salesforce found suspicious activity linked to Gainsight apps that connect into Salesforce.

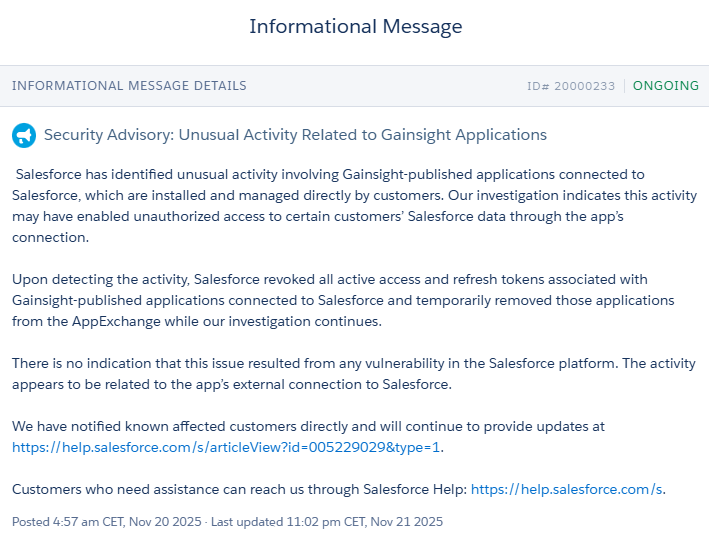

Here’s the official advisory Salesforce issued to customers:

To contain risk, Salesforce:

Turned off those apps for now

Cancelled the “keys” that those apps use to access customer data

Is still investigating what happened

Early signs indicate an incident in the integration layer, rather than a core Salesforce platform flaw.

The Story

Like most enterprise CS platforms, Gainsight connects to Salesforce to sync:

Product usage

Accounts and contacts

Opportunities and renewals

Then it turns that into health scores, CTAs, success plans, and QBR decks.

To do that, it uses special “access keys” (OAuth tokens). Think of them like a valet key to your car:

You don’t give the full login

You give a key that lets the app do specific actions on your behalf

If that key is stolen or misused, someone can perform any action that the app was authorized to do within Salesforce.
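For the admins reading along, the valet-key idea can be sketched in a few lines of Python. This is a toy model, not how Salesforce or Gainsight implement OAuth: the `AccessKey` class, the `can_perform` helper, and the action names are all hypothetical. It shows that a key carries a fixed list of allowed actions and that revoking it shuts everything off at once.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AccessKey:
    """Hypothetical stand-in for an OAuth token: an app name plus the actions it may perform."""
    app: str
    allowed_actions: frozenset
    revoked: bool = False


def can_perform(key: AccessKey, action: str) -> bool:
    # A revoked key can do nothing; a live key can do only what it was granted.
    # Note the check never asks WHO is holding the key, which is why a stolen key is dangerous.
    return not key.revoked and action in key.allowed_actions


# Made-up example: a CS tool that may only read two kinds of records.
key = AccessKey(app="cs-platform", allowed_actions=frozenset({"read_accounts", "read_opportunities"}))
print(can_perform(key, "read_accounts"))    # True
print(can_perform(key, "delete_accounts"))  # False

# Revoking the key blocks even the actions it used to allow.
revoked = AccessKey(app=key.app, allowed_actions=key.allowed_actions, revoked=True)
print(can_perform(revoked, "read_accounts"))  # False
```

The takeaway for non-technical readers: the narrower the list of allowed actions, the less a stolen key can do.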

So Salesforce did what any serious platform should:

Cancelled those keys

Paused the apps while they investigate

Started reviewing logs and activity patterns

This is part of a broader trend: in the last year, many SaaS incidents came from third-party connections, not from the main product itself.

Why This Matters For CS, RevOps, and GTM Leaders

Your CS strategy depends on these connections working and staying trustworthy:

Health scores updating on time

CTAs firing when usage drops

Playbooks running before renewals

QBR reports reflecting reality

When a major integration is paused or reset:

Health scores go stale

CTAs stop firing or fire late

Dashboards stop matching what is really happening in accounts

Executives start asking:

“How exposed are we?”

“Who owns this risk?”

“What’s the plan if this happens again?”

If you’re comparing tools like Gainsight, Salesforce, HubSpot or others, the Customer Success Platform Comparison helps you look at them through a simple lens: data in, data out, and how much risk each connection adds.

What You Should Do In The Next 72 Hours

Incidents like this aren’t about one vendor; they’re about how the entire CS ecosystem depends on secure, predictable data flows across platforms.

This is the playbook for non-technical leaders. You own the approach; your admin or architect owns the clicks.

1. Make a clean list of what is connected

Ask your Salesforce / CS Ops owners for:

Every Gainsight app or integration connected to Salesforce

What each one is used for, in plain language

Which teams rely on which flows (health, renewals, expansions, exec reporting)

Turn this into a one-page map. You want to see where your revenue-critical dependencies are.

2. Reset the “keys” and system logins

Ask your technical owner to:

Reconnect any affected apps

Regenerate the access keys (tokens)

Change any shared integration passwords

Turn on multi-factor authentication for any “system” accounts

If your team needs a simple checklist to keep Sales and CS aligned during messy periods, use the structure from the Sales-to-CS Handover Playbook so handoffs don’t collapse while systems are unstable.

3. Tighten who can see what

Most teams are still too generous with access.

Ask a simple question: “Which tools truly need full access, and which only need a few specific objects and fields?”

Then push your admin to:

Create a dedicated Integration User used only by tools, not humans

Give it the minimum permissions needed to run the workflows

Restrict where and how it can log in (SSO only, IP restrictions if possible)

If you want a guided way to automate more while tightening access, the Customer Success Lifecycle Automation Playbook walks through how to scale workflows without exploding risk.

For Your Admin: 3 Technical Checks to Run

You don’t need to do these yourself, but someone on your team must:

Review Connected Apps & OAuth usage

Check which apps the integration user has used recently

Confirm that the old tokens are revoked and the new tokens are in place

Inspect the recent API and export activity

Look for unusual spikes in API calls

Look for large report exports or bulk data pulls

Validate monitoring and alerts

Make sure there are alerts on failed logins, new tokens, and large exports

Confirm logs are being kept long enough to investigate issues
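If your admin wants a quick first pass at the “unusual spikes” check, here is a minimal Python sketch. It assumes someone has already exported daily API call counts into a simple day-to-count table. The `flag_spikes` function, the 3x threshold, and the sample numbers are illustrative assumptions, not a Salesforce feature or an official detection rule.

```python
from statistics import median


def flag_spikes(daily_counts: dict[str, int], multiplier: float = 3.0) -> list[str]:
    """Return the days whose count exceeds `multiplier` times the median day."""
    if not daily_counts:
        return []
    # The median is used as the baseline so one huge day does not hide itself.
    baseline = median(daily_counts.values())
    return [day for day, count in daily_counts.items() if count > multiplier * baseline]


# Made-up sample data: API calls per day for one integration user.
api_calls = {
    "2024-03-01": 1200,
    "2024-03-02": 1100,
    "2024-03-03": 1300,
    "2024-03-04": 9800,
    "2024-03-05": 1250,
}

print(flag_spikes(api_calls))  # ['2024-03-04']
```

A flagged day is a prompt to look closer, not proof of a problem. Tune the multiplier to your own normal volume.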

Keep this list in your incident notes so you can show executives you covered both the business side and the technical side.

Look For Suspicious Behaviour (But Don’t Panic)

You’re not trying to become a security engineer. You’re trying to answer two questions:

“Did anything unusual happen?”

“What did we confirm did not happen?”

Ask your Salesforce owner to check:

Recent logins for the integration user

Recent API activity driven by Gainsight apps

Any large exports in the last few weeks

Then summarize what you found using a simple view from the CS Reporting Guide so you can show:

What was at risk

What was checked

What you changed

Control The Story With Your Customers

While systems are being checked and reconnected:

Give AMs and CSMs a short, shared script on what happened and what you’re doing

Flag metrics that might be incomplete in QBRs or EBRs

Proactively address concerns instead of waiting for customers to ask

To keep QBRs credible during incidents, use the structure from Transform Quarterly Business Reviews.

For high-stakes conversations, reuse the language patterns in Master Escalations so your team sounds decisive, not defensive.

The Bigger Problem: Vendor Dependency You Don’t See

This incident is not just about Salesforce or Gainsight. It is about how fragile your stack becomes when:

One vendor connects to another

That connection has broad access

No one regularly reviews what access actually exists

For CS leaders, platform choice is now part of your risk posture. The Customer Success Platforms: Problems & Solutions guide calls out:

Tools that demand more access than they need

Weak or missing audit trails

Integrations that will be hard and risky to unwind later

What To Put In Front Of Executives (5 Slides, No Fluff)

You don’t need a 30-page deck. You need a short update that leads to decisions.

1. What happened

Salesforce detected unusual activity tied to Gainsight apps, cancelled access keys, and paused apps while investigating.

2. Our exposure

Which connections you had, what they were used for, and whether sensitive data (PII, financials) could have been touched.

3. Actions taken in 72 hours

Keys reset, accounts reviewed, monitoring set up, customer communications prepared.

4. Business impact

Any reporting gaps, health-score blind spots, and how the team is handling them in active deals and renewals.

5. Forward plan

Regular reviews of connected apps, clear vendor standards, and a tighter integration policy.

For a one-pager that leaders will actually read, you can reuse the structure from Executive Engagement Tactics.

Policy Upgrades You Should Steal

Translate “security” into habits your org can follow:

One system account per tool

No more random personal logins acting as integration users.

Regular key renewal

Treat access keys like credit cards: they expire and get reissued on a schedule.

Quarterly connected-app review

Every 3 months, ask: “Do we still need this? What access does it have? Who owns it?”

Export alerts

Set alerts on large data exports or bulk pulls. If a giant export runs at 2 a.m., you want to know.

Vendor pack before buying

Before signing, ask vendors for security certifications, test summaries, simple data-flow diagrams, and a clear incident process.
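The key-renewal and quarterly-review habits above are easy to turn into a small check your admin can run. Here is a minimal Python sketch, assuming you keep a simple inventory of each integration and the date its key was last issued. The 90-day limit, the `keys_due_for_rotation` function, and the integration names are assumptions for illustration only.

```python
from datetime import date

MAX_KEY_AGE_DAYS = 90  # assumed policy; pick whatever schedule your org agrees on


def keys_due_for_rotation(
    issued_on: dict[str, date],
    today: date,
    max_age_days: int = MAX_KEY_AGE_DAYS,
) -> list[str]:
    """Return the names of integrations whose key is older than the allowed age."""
    return sorted(
        name for name, issued in issued_on.items()
        if (today - issued).days > max_age_days
    )


# Made-up inventory: integration name -> date its current key was issued.
inventory = {
    "cs-platform-sync": date(2024, 1, 10),
    "billing-export": date(2024, 5, 2),
    "survey-tool": date(2023, 11, 20),
}

print(keys_due_for_rotation(inventory, today=date(2024, 6, 1)))
# ['cs-platform-sync', 'survey-tool']
```

The same inventory doubles as the agenda for your quarterly review: every row should have an owner and a reason to exist.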

To make this repeatable without extra headcount, adapt the checklists from Scale Customer Success Team Efficiency.

If You Use Gainsight (Or Any CS Platform) Right Now

Expect some friction when apps come back online:

Reconnection flows

Rules and mappings that need a sanity check

Health scores and CTAs with data gaps

Practical steps:

Label any backfilled metrics, so you know which date range was impacted

Note which dashboards and reports might be unreliable for that window

Document what changed, who approved it, and what checks were done

If leadership asks, “Can we still trust our health score?”, use the approach inside The Health Score That Predicts Renewals and the Retention Health Score Guide to reset or validate your model in a transparent way.

If this incident triggers a platform review, the AI + CRM Integration Playbook helps you test how each vendor handles permissions, logging, and failure modes in real, CS-focused scenarios.

What We Learned From Past Incidents

The Okta support-system breach in late 2023 showed how a single integration incident can ripple across GTM and CS teams.

The basics still work, even if you’re not technical:

Protect every important login with multi-factor

Give tools only the access they truly need

Rotate keys and passwords on a schedule

Watch for unusual data exports

Script tough customer conversations ahead of time

The Transform Customer Calls guide helps your team show up with clarity and value even when the news is uncomfortable.

Final Word

Use this incident to make your CS stack audit-ready and your customer conversations unshakeable.

If this breakdown helped you steady your team today, keep going. I share breakdowns like this to make you the most prepared operator in the room.

When you want deeper guidance — frameworks, scripts, and full incident playbooks — everything you need is already inside your CS Café account.

– Hakan | Founder, The Customer Success Café Weekly Newsletter